Scanning Docker images using Trivy within Azure DevOps


Container scanning using Trivy

Trivy is an open-source security scanner that finds vulnerabilities and other security issues in container images, such as secrets stored inside configuration files.

In my case, I integrated it into my existing CI/CD pipeline so that my Docker images are scanned for vulnerabilities before they are deployed.

Pipeline config

Adding Trivy to a pipeline takes two steps: installing it, then running the scan.

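Installing it is simply a case of downloading the Linux `.deb` package from Trivy's GitHub releases and installing it with `dpkg`, as the first step of the pipeline below does.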
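If you want to pin the Trivy version in one place so an upgrade is a one-line change, the install step can be wrapped in a function. This is a minimal sketch assuming the same GitHub release naming the pipeline uses (`trivy_<version>_Linux-64bit.deb`) and a Debian/Ubuntu agent; `trivy_deb_url` and `install_trivy` are hypothetical helper names, not part of Trivy.

```bash
#!/usr/bin/env bash
# Hypothetical helpers (not part of Trivy) for a version-pinned install
# on a Debian/Ubuntu agent such as ubuntu-latest.

# Print the release download URL for a given Trivy version (e.g. 0.65.0).
trivy_deb_url() {
  local version="$1"
  printf 'https://github.com/aquasecurity/trivy/releases/download/v%s/trivy_%s_Linux-64bit.deb\n' \
    "$version" "$version"
}

# Download and install the .deb, then print the installed version.
# Needs network access and sudo, so it is only defined here, not run.
install_trivy() {
  local version="$1"
  local deb="trivy_${version}_Linux-64bit.deb"
  wget -q -O "$deb" "$(trivy_deb_url "$version")" || return 1
  sudo dpkg -i "$deb" || return 1
  trivy --version
}
```

On the agent this would be called as `install_trivy 0.65.0`.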

To run a scan you call the `trivy image` command with a few additional arguments.

`--exit-code 1 --severity HIGH,CRITICAL` makes Trivy exit with a non-zero exit code whenever it finds a HIGH or CRITICAL vulnerability. This in turn fails the pipeline, which in my configuration means the web app is not published.
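Trivy's exit code is the only thing the pipeline reacts to. A minimal sketch of the same gate as a Bash function, assuming you also want a readable error in the Azure DevOps run summary; `scan_gate` is a hypothetical name, while `##vso[task.logissue type=error]` is the standard Azure Pipelines logging command.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper around the scan command used in the pipeline.
# Returns Trivy's exit code, so a Bash@3 step fails when it is non-zero.
scan_gate() {
  local image_ref="$1"
  local status=0
  # --exit-code 1: exit non-zero if any vulnerability matches --severity
  trivy image --exit-code 1 --severity HIGH,CRITICAL "$image_ref" || status=$?
  if [ "$status" -ne 0 ]; then
    # Surface the failure as an error in the pipeline run summary.
    # A non-zero code can also mean the scan itself failed (e.g. auth).
    echo "##vso[task.logissue type=error]Trivy scan failed for ${image_ref} (HIGH/CRITICAL findings or a scan error)"
  fi
  return "$status"
}
```

In a `Bash@3` inline script this would be called as `scan_gate "$(containerRegistryURL)/$(imageRepository):$(tag)"`, with the function defined earlier in the same script.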

You can also use `--exit-code 0 --severity HIGH,CRITICAL,MEDIUM,LOW` to generate a report of all vulnerabilities without failing the pipeline.
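If you want the report saved to a file rather than only printed to the log (for example to publish it with the `PublishPipelineArtifact@1` task), a sketch of the report-only mode might look like this. `trivy_report` and the default file name are hypothetical; `--format` and `--output` are standard Trivy flags.

```bash
#!/usr/bin/env bash
# Hypothetical helper: record every finding without failing the build.
trivy_report() {
  local image_ref="$1"
  local report_file="${2:-trivy-report.txt}"
  # --exit-code 0 (also Trivy's default): findings do not affect the exit code
  # --format table: human-readable output (json is another option)
  # --output: write the report to a file instead of stdout
  trivy image \
    --exit-code 0 \
    --severity HIGH,CRITICAL,MEDIUM,LOW \
    --format table \
    --output "$report_file" \
    "$image_ref"
}
```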

`$(containerRegistry)/$(imageRepository):$(tag)` points to the image stored in the ACR (Azure Container Registry), in the format `{container registry}/{image name}:{tag}`. In the scan step itself I use the `$(containerRegistryURL)` variable for the registry part.
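When the registry, repository and tag come from separate variables, it can help to assemble the reference in one place. A minimal sketch, assuming the registry value might be passed with or without an `https://` prefix (the pipeline's `TRIVY_AUTH_URL` adds one, but an image reference must not include a scheme); `image_ref` is a hypothetical helper and `myacr.azurecr.io`, `webapp` and `42` are placeholder values.

```bash
#!/usr/bin/env bash
# Hypothetical helper: build "{container registry}/{image name}:{tag}".
# Strips an optional http(s):// scheme and trailing slash from the registry.
image_ref() {
  local registry="${1#https://}"
  registry="${registry#http://}"
  registry="${registry%/}"
  local repository="$2"
  local tag="$3"
  if [ -z "$registry" ] || [ -z "$repository" ] || [ -z "$tag" ]; then
    echo "usage: image_ref REGISTRY REPOSITORY TAG" >&2
    return 1
  fi
  printf '%s/%s:%s\n' "$registry" "$repository" "$tag"
}
```

For example, `image_ref https://myacr.azurecr.io/ webapp 42` prints `myacr.azurecr.io/webapp:42`.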

You also need to set a few environment variables in the step's `env` section.

These supply the login details for my ACR: the open-source (and free) version of Trivy only supports basic authentication (a username and password) when pulling the image from the ACR.

Paid plans also support managed identity authentication, so you do not need explicit usernames and passwords.
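To reproduce the pipeline's scan from a local shell, the same variables can be exported first. A minimal sketch, assuming the ACR admin user is enabled (using the registry name as the username, as the pipeline does, suggests this) and the public-cloud login server format `<name>.azurecr.io`; `acr_trivy_env` and `require_trivy_auth` are hypothetical helpers, while `az acr credential show` is the Azure CLI command that returns the admin credentials.

```bash
#!/usr/bin/env bash
# Export the variables the pipeline's env section sets, using the ACR
# admin user. Needs the Azure CLI and an `az login` session; not run here.
acr_trivy_env() {
  local registry_name="$1"   # e.g. myacr (placeholder)
  export TRIVY_AUTH_URL="https://${registry_name}.azurecr.io"
  export TRIVY_USERNAME="$registry_name"
  TRIVY_PASSWORD="$(az acr credential show --name "$registry_name" \
    --query 'passwords[0].value' --output tsv)" || return 1
  export TRIVY_PASSWORD
}

# Fail fast with a clear message if credentials are missing, instead of
# letting the scan fail later with a less obvious registry error.
require_trivy_auth() {
  local var missing=0
  for var in TRIVY_USERNAME TRIVY_PASSWORD; do
    if [ -z "${!var:-}" ]; then
      echo "missing environment variable: $var" >&2
      missing=1
    fi
  done
  return "$missing"
}
```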

- stage: Trivy

displayName: 'Install trivy and run a scan'

jobs:

- job: Trivy

displayName: Install Trivy and run a scan

steps:

- script: |

sudo apt-get install rpm

wget https://github.com/aquasecurity/trivy/releases/download/v0.65.0/trivy_0.65.0_Linux-64bit.deb

sudo dpkg -i trivy_0.65.0_Linux-64bit.deb

trivy -v

- task: Bash@3

displayName: 'run scan'

inputs:

targetType: 'inline'

script: |

trivy image --exit-code 1 --severity HIGH,CRITICAL $(containerRegistryURL)/$(imageRepository):$(tag)

env:

TRIVY_AUTH_URL: "https://$(containerRegistryURL)"

TRIVY_USERNAME: $(containerRegistryName)

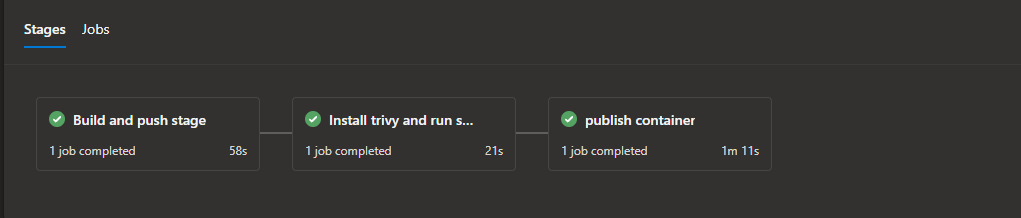

TRIVY_PASSWORD: $(containerPassword)To ensure that my pipeline exits when the scan flags up vulnerabilites I also added a dependency portion to the pubish stage so it will not run unless the scan stage succeeds

```yaml
- stage: Publish
  condition: succeeded('Trivy')
  dependsOn: Trivy
```

Full azure-pipelines.yml

```yaml
# Docker
pool:
  vmImage: 'ubuntu-latest'

resources:
- repo: self

variables:
- group: staticLib

stages:
- stage: Build
  displayName: Build and push stage
  jobs:
  - job: Build
    displayName: Build
    steps:
    - task: Docker@2
      displayName: Build and push an image to container registry
      inputs:
        command: buildAndPush
        repository: $(imageRepository)
        dockerfile: $(dockerfilePath)
        containerRegistry: $(dockerRegistryServiceConnection)
        tags: |
          $(tag)

- stage: Trivy
  displayName: 'Install trivy and run a scan'
  jobs:
  - job: Trivy
    displayName: Install Trivy and run a scan
    steps:
    - script: |
        sudo apt-get install rpm
        wget https://github.com/aquasecurity/trivy/releases/download/v0.65.0/trivy_0.65.0_Linux-64bit.deb
        sudo dpkg -i trivy_0.65.0_Linux-64bit.deb
        trivy -v
    - task: Bash@3
      displayName: 'run scan'
      inputs:
        targetType: 'inline'
        script: |
          trivy image --exit-code 1 --severity HIGH,CRITICAL $(containerRegistryURL)/$(imageRepository):$(tag)
      env:
        TRIVY_AUTH_URL: "https://$(containerRegistryURL)"
        TRIVY_USERNAME: $(containerRegistryName)
        TRIVY_PASSWORD: $(containerPassword)

- stage: Publish
  condition: succeeded('Trivy')
  dependsOn: Trivy
  displayName: 'publish container'
  jobs:
  - job:
    displayName: Publish container
    steps:
    - task: AzureWebAppContainer@1
      displayName: 'Azure Web App on Container Deploy'
      inputs:
        azureSubscription: $(azureSubscription)
        appName: $(appName)
        containers: $(containerRegistry)/$(imageRepository):$(tag)
```

Fixing my own vulnerabilities

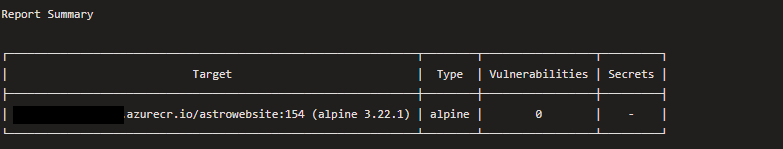

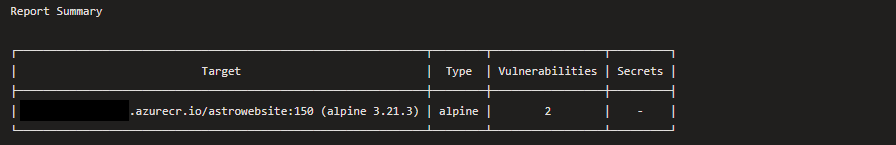

Adding container scanning revealed high-severity vulnerabilities in my own deployment.

When I investigated my Dockerfile, I found explicit references to out-of-date base image versions:

```dockerfile
FROM node:22-alpine AS builder
FROM nginx:1.27-alpine AS runtime
```
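Pinned base-image tags are easy to overlook in a longer Dockerfile. A small sketch that lists the image named in each `FROM` instruction so each one can be reviewed or scanned on its own; `base_images` is a hypothetical helper, and it will also print earlier build-stage names (for example `FROM builder`) if a Dockerfile uses them.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the image referenced by each FROM line.
# Dockerfile instructions are case-insensitive, hence toupper().
# Skips flags such as --platform=... that may precede the image name.
base_images() {
  local dockerfile="${1:-Dockerfile}"
  awk 'toupper($1) == "FROM" {
         for (i = 2; i <= NF; i++) {
           if ($i !~ /^--/) { print $i; break }
         }
       }' "$dockerfile"
}
```

On a Dockerfile containing the two `FROM` lines above, `base_images Dockerfile` prints `node:22-alpine` and `nginx:1.27-alpine`, one per line.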

This was a simple fix, but without automated scanning and reporting I probably wouldn't have found this security flaw.

```
FROM node:22-alpine AS builder     ->  FROM node:latest AS builder
FROM nginx:1.27-alpine AS runtime  ->  FROM nginx:mainline-alpine AS runtime
```
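Before pushing a base-image change, the candidate images can also be scanned directly with the same severity threshold the pipeline uses. A sketch, assuming Trivy is installed locally; `scan_images` is a hypothetical helper that reports which images would fail the gate.

```bash
#!/usr/bin/env bash
# Hypothetical helper: scan each image with the pipeline's threshold and
# print a FAIL line for each one that does not pass. Returns 1 if any fail.
scan_images() {
  local image failed=0
  for image in "$@"; do
    if ! trivy image --exit-code 1 --severity HIGH,CRITICAL "$image"; then
      echo "FAIL: $image"
      failed=1
    fi
  done
  return "$failed"
}
```

For example, `scan_images node:latest nginx:mainline-alpine` checks both replacement images in one go.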

After making this change, I ran the pipeline again; it completed every stage and deployed my app as expected.